How brains synchronize during cooperative tasks

Humans are social creatures. But what makes us this way? To fully understand how the brain gives rise to social behaviors, we need to study it during social encounters. Moreover, we need to analyze not only the internal operations of one brain during social activities but also the dynamic interplay between multiple brains engaged in the same activity. This emerging research field, referred to as “second-person neuroscience,” employs hyperscanning (the simultaneous recording of the activity of multiple brains) as its signature technique.

Many early hyperscanning studies used functional near-infrared spectroscopy (fNIRS) to analyze brain synchronization between participants engaged in experimentally controlled motor tasks. These tasks, however, were far from natural, and the brain synchronization they produced may simply have reflected the participants moving at the same time.

In contrast, recent hyperscanning studies employ more natural, free-form settings for the shared tasks, allowing for unstructured communication and creative objectives. While this produces more interesting results, it takes much more effort to extract specific social behaviors from the video footage and link them to particular brain synchronization patterns. Because this extraction is so laborious, analyses have been limited to short-term social interactions, which represent a tiny subset of possible human social behaviors.

Now, a research team led by Yasuyo Minagawa of Keio University, Japan, has worked out an elegant solution to this problem. In their paper published in Neurophotonics, the team presented a novel approach that uses computer vision techniques to automatically extract social behaviors (or “events”) from recordings of a natural two-participant task. They then used an event-related generalized linear model to determine which social behaviors could be linked to particular types of neural synchrony, both between brains and within each participant’s brain.
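The general idea of an event-related generalized linear model can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' pipeline: it assumes a single signal time series `y` (which could stand for one fNIRS channel or a synchrony trace), a canonical double-gamma hemodynamic response function with SPM-style default shape parameters, and gaze events given as onsets and durations in seconds. The function names and the simulated numbers are hypothetical.

```python
import math
import numpy as np

def canonical_hrf(dt, duration=32.0):
    """Double-gamma HRF with SPM-style shapes (peak 6, undershoot 16, ratio 1/6).
    An assumed choice for illustration; the study may model the response differently."""
    t = np.arange(0.0, duration, dt)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()  # unit sum: a long event gives a regressor plateau near 1

def event_regressor(onsets, durations, n_samples, dt):
    """Boxcar equal to 1 while an event (e.g. a gaze at the partner's face) is ongoing."""
    box = np.zeros(n_samples)
    for onset, dur in zip(onsets, durations):
        start = int(round(onset / dt))
        stop = max(int(round((onset + dur) / dt)), start + 1)
        box[start:stop] = 1.0
    return box

def fit_event_glm(y, onsets, durations, dt):
    """Ordinary least squares for y = b_event * (boxcar convolved with HRF) + b_0 + noise."""
    n = len(y)
    x_event = np.convolve(event_regressor(onsets, durations, n, dt), canonical_hrf(dt))[:n]
    design = np.column_stack([x_event, np.ones(n)])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta  # [event effect, baseline]

# Hypothetical simulation: 300 s sampled at 10 Hz, four 5-s gaze events,
# true event effect 2.0 and baseline 0.5, plus small Gaussian noise.
rng = np.random.default_rng(0)
dt, n = 0.1, 3000
onsets, durations = [20.0, 80.0, 150.0, 220.0], [5.0] * 4
x_true = np.convolve(event_regressor(onsets, durations, n, dt), canonical_hrf(dt))[:n]
y = 2.0 * x_true + 0.5 + 0.05 * rng.standard_normal(n)
beta = fit_event_glm(y, onsets, durations, dt)
print(beta)
```

Because the simulated signal was built from the same regressor, the fitted event effect lands close to 2.0. In a real analysis, per-pair estimates like this would then be compared at the group level (for example, cooperative versus individual play), a step this sketch omits.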

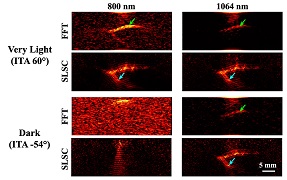

Each pair of participants (39 pairs in total) engaged in a natural, cooperative, and creative task: designing and furnishing a digital room in a computer game. They were allowed to communicate freely to create a room that satisfied both of them. The participants also completed the same task alone, so that the researchers could compare between-brain synchronization (BBS) and within-brain synchronization (WBS) across the individual and cooperative tasks. The social behavior the team focused on was eye gaze, that is, whether a participant directed their gaze at the other’s face. They extracted this behavior automatically from the video footage using open-source software, which simplified the data analysis.

Overview of the experimental setup used to study brain synchronization during cooperative tasks. (I) Participants had to design the interior of a digital room together, and a computer vision system kept track of their gaze to pinpoint the social behavior of looking at the other participant’s face. (II) The participants also completed the same task individually. (III) While they completed the experiment, their brain activity was recorded. Statistical analysis was then used to assess between-brain and within-brain synchronization of various cerebral regions. Credit: Xu et al., doi 10.1117/1.NPh.10.1.013511
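Gaze-tracking output of this kind can be represented as one label per video frame. The snippet below is a minimal sketch, assuming a boolean label per frame (True when a participant looks at the partner's face) and a known frame rate, of how such labels could be turned into event onsets and durations for later modeling. The function name, the frame rate, and the `min_duration` filter are hypothetical and are not taken from the study.

```python
import numpy as np

def frames_to_events(looking, fps, min_duration=0.0):
    """Convert per-frame boolean gaze labels into (onset_s, duration_s) events."""
    looking = np.asarray(looking, dtype=bool)
    # Pad with False on both sides so every run has a rising and a falling edge.
    padded = np.concatenate([[False], looking, [False]]).astype(int)
    edges = np.diff(padded)
    starts = np.flatnonzero(edges == 1)   # first frame of each gaze run
    stops = np.flatnonzero(edges == -1)   # frame just after each gaze run
    events = []
    for s, e in zip(starts, stops):
        duration = float((e - s) / fps)
        if duration >= min_duration:      # optionally drop very brief glances
            events.append((float(s / fps), duration))
    return events

# Hypothetical labels sampled at 10 frames per second.
labels = [0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1]
print(frames_to_events(labels, fps=10))
print(frames_to_events(labels, fps=10, min_duration=0.15))
```

Representing gaze as onset/duration pairs keeps the behavioral coding separate from the statistical model, so the same events can be reused with different synchrony measures.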

One of the most intriguing findings of the study was that, during cooperative play, there was strong BBS among the superior and middle temporal regions and specific parts of the prefrontal cortex in the right hemisphere, but comparatively little WBS. Moreover, BBS was strongest when one of the participants raised their gaze to look at the other. Interestingly, the pattern reversed during individual play, with increased WBS within the same regions.
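To make the BBS/WBS distinction concrete, here is a toy sketch. It swaps in windowed Pearson correlation as the synchrony measure purely for simplicity (fNIRS hyperscanning studies often use other measures, such as wavelet transform coherence), and all signals are simulated: a "temporal" channel in each of two participants sharing a common component, and a "prefrontal" channel in participant A that is independent of it. Channel names, window length, and noise levels are hypothetical.

```python
import numpy as np

def windowed_correlation(x, y, win):
    """Pearson correlation of x and y in consecutive, non-overlapping windows of `win` samples."""
    n_win = len(x) // win
    r = np.empty(n_win)
    for k in range(n_win):
        xs = x[k * win:(k + 1) * win]
        ys = y[k * win:(k + 1) * win]
        r[k] = np.corrcoef(xs, ys)[0, 1]
    return r

# Hypothetical toy data for two participants (A and B).
rng = np.random.default_rng(1)
n, win = 6000, 200
shared = rng.standard_normal(n)                  # component common to both brains (assumed)
a_temporal = shared + 0.5 * rng.standard_normal(n)
b_temporal = shared + 0.5 * rng.standard_normal(n)
a_prefrontal = rng.standard_normal(n)            # unrelated to a_temporal in this toy

bbs = windowed_correlation(a_temporal, b_temporal, win)    # between-brain, same region
wbs = windowed_correlation(a_temporal, a_prefrontal, win)  # within-brain, two regions
print(f"mean BBS r = {bbs.mean():.2f}, mean WBS r = {wbs.mean():.2f}")
```

Here the between-brain correlation comes out high and the within-brain correlation near zero only because the toy data were constructed that way; the sketch illustrates what each quantity compares, not the study's results.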

According to Minagawa, these results agree with the idea that our brains work as a “two-in-one system” during certain social interactions. “Neuron populations within one brain were activated simultaneously with similar neuron populations in the other brain when the participants cooperated to complete the task, as if the two brains functioned together as a single system for creative problem-solving,” she explains. “These phenomena are consistent with the notion of a ‘we-mode,’ in which interacting agents share their minds in a collective fashion and facilitate interaction by accelerating access to the other’s cognition.”

Overall, this study provides evidence hinting at the remarkable capability of the human brain to understand and synchronize with others when the situation calls for it. Minagawa has high hopes for this experimental strategy and envisions further research with other types of social cues and behaviors in various types of tasks. “We could apply our method to more detailed social behaviors in future analyses, such as facial expressions and verbal communication. Our analytical approach can provide insights and avenues for future research in interactive social neuroscience,” she concludes. Of course, new techniques that can effectively record and process more complex social behaviors will be needed.

While there is certainly much work left to do, ingenious applications of technologies like computer vision and statistical models can enable a better understanding of how our brains (co)operate during social activities.

Read the Gold Open Access article by M. Xu et al., “Two-in-one system and behavior-specific brain synchrony during goal-free cooperative creation: an analytical approach combining automated behavioral classification and the event-related generalized linear model,” Neurophotonics 10(1), 013511 (2023), doi 10.1117/1.NPh.10.1.013511

The article appears in the Special Section Celebrating 30 Years of Functional Near Infrared Spectroscopy, guest edited by David Boas, Judit Gervain, David Highton, Yasuyo Minagawa, and Rickson Coelho Mesquita for Neurophotonics.
